Prologue

Over the past year, AI's role within the Web3 ecosystem has undergone a fundamental shift. It is no longer merely an auxiliary tool that helps humans process information faster or generate analysis. Instead, AI has become a core driver for trading efficiency and decision quality, embedding itself deeply across the entire chain of trade initiation, execution, and capital flow. As large language models (LLMs), AI agents, and automated execution systems mature, trading paradigms are evolving from a traditional "human-led, machine-assisted" model toward a new frontier: "Machine-planned, machine-executed, human-supervised."

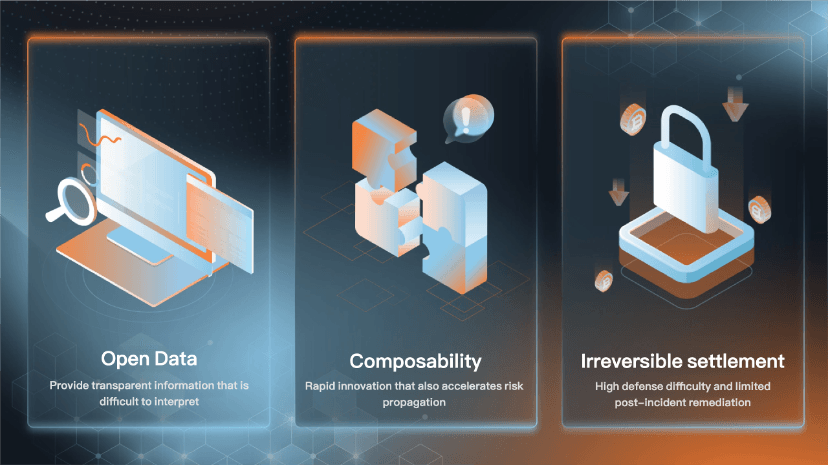

This shift is uniquely amplified by Web3's three intrinsic traits: public data, protocol composability, and irreversible settlement. Together, they create a potent duality: The promise of unprecedented efficiency gains, along with a sharply rising risk curve.

This transformation is taking shape across three distinct, concurrent realities:

- A new trading reality: AI is starting to independently handle core decision-making, such as identifying signals, generating strategies, and selecting execution paths. Through innovations like the x402 protocol, it facilitates direct machine-to-machine payments and service calls, accelerating the rise of machine-executable trading systems.

- An escalation of risk and attack vectors: As trading and execution become fully automated, vulnerability exploitation, attack path generation, and fund laundering are also becoming automated and scalable. Risk now propagates at a speed that consistently outpaces human capacity to react and intervene.

- A redefined security, risk control, and compliance imperative: For intelligent trading to be sustainable, security, risk management, and compliance must themselves become engineered, automated, and modular. Efficiency must be matched with engineered control.

It is against this industry backdrop that BlockSec and Bitget present this report. We move past the basic question of "Should we use AI?" to address a more pressing and practical one: As trading, execution, and payments become comprehensively machine-executable, how is Web3's underlying risk structure evolving, and how must the industry rebuild its foundational security, risk control, and compliance capabilities in response? We systematically examine the critical changes and response strategies at the intersection of AI, trading, and security through three lenses: The formation of new scenarios, the amplification of new challenges, and the emergence of new opportunities.

Chapter 1: The evolution of AI and its integration with Web3

AI is evolving from an auxiliary tool into an agent system capable of planning, using tools, and executing tasks in a closed loop. Web3's native features—public data, composable protocols, irreversible settlement—magnify both the returns of automation and the costs of operational failures and malicious attacks. This fundamental characteristic dictates that discussing defense and compliance in Web3 is not merely about applying AI tools to existing processes; it constitutes a comprehensive, systemic paradigm shift—where trading, risk control, and security are all progressing in parallel towards machine-executable models.

1. AI's capability leap in financial trading and risk control: From auxiliary tool to autonomous decision system

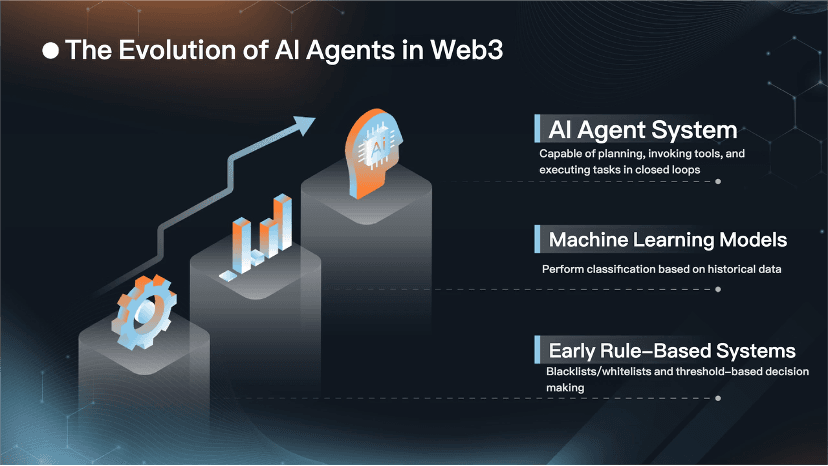

If we view the changing role of AI in financial trading and risk control as an evolutionary chain, the most critical demarcation is whether the system possesses closed-loop execution capability.

Early rule-based systems functioned more like automated tools with manual overrides. Their core logic was to translate expert knowledge into explicit threshold judgments, blacklist/whitelist management, and fixed risk control policies. This approach offered advantages in explainability and manageable governance costs. However, its drawbacks were significant: Extremely slow reaction to novel business models or adversarial attacks.

As business complexity grew, rules would accumulate uncontrollably, eventually creating an unsustainable pool of strategy debt that severely crippled system flexibility and responsiveness.

The introduction of machine learning pushed risk control into a phase of statistical pattern recognition. Through feature engineering and supervised learning, systems achieved risk scoring and behavior classification, markedly improving risk detection coverage. Yet, this model was highly dependent on historically labeled data and stable data distributions. It suffers from the classic problem of distribution drift: The patterns learned during training on historical data can become obsolete in live environments due to shifting market conditions or evolved attack methods, leading to a sharp decline in model accuracy. In essence, past experience becomes inapplicable. When attackers alter their tactics, perform cross-chain transfers, or fragment funds into smaller amounts, these models exhibit significant judgment errors.

The emergence of large language models and AI agents has introduced a revolutionary change. The core advantage of an AI agent lies not only in being smarter, with enhanced cognitive and reasoning abilities, but also in being more capable, with comprehensive process orchestration and execution power. It elevates risk management from traditional single-point prediction to full-process, closed-loop handling. This encompasses a complete sequence: identifying anomalous signals, gathering corroborating evidence, linking associated addresses, understanding contract behavior logic, assessing risk exposure, generating targeted mitigation suggestions, triggering control actions, and producing auditable records. In other words, AI has evolved from flagging a potential problem to carrying it through to an actionable state.

A parallel evolution is evident on the trading front: The shift from the traditional manual cycle of reading reports, analyzing metrics, and coding strategies to an AI-driven, fully automated process of multi-source data ingestion, strategy generation, order execution, and post-trade analysis and optimization. As the system's action chain lengthens, it is becoming an autonomous decision system.

This shift, however, brings forth a critical caveat: The transition to an autonomous decision system paradigm escalates risk in tandem. Human operational errors typically exhibit low frequency and inconsistency. Machine errors, conversely, can be frequent, replicable, and capable of being triggered at scale simultaneously. Therefore, the true challenge in applying AI within financial systems is not "can it be done?" but "can it be done within clearly defined and enforceable boundaries?" These boundaries include explicit permission scopes, capital limits, permissible contract interaction ranges, and mechanisms for automatic de-escalation or emergency shutdown upon risk detection. This challenge is profoundly amplified in the Web3 domain, primarily due to the irreversibility of on-chain transactions—once an erroneous transaction or successful attack is confirmed, the associated loss of funds is often permanent.
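
The boundaries listed above can be made concrete as a policy check that runs before every agent action. The sketch below is a minimal illustration under stated assumptions: `AgentPolicy`, its fields, and the limit values are hypothetical, and a production system would enforce such rules outside the agent's own process, for example in a signing service or smart-contract wallet.

```python
from dataclasses import dataclass


@dataclass
class AgentPolicy:
    """Hypothetical per-agent boundary: permission scope, capital limits, contract whitelist."""
    allowed_actions: set[str]      # permission scope, e.g. {"swap"}
    max_per_tx: float              # maximum notional per transaction
    max_daily: float               # cumulative notional allowed per day
    allowed_contracts: set[str]    # permissible contract addresses (stored lowercase)
    spent_today: float = 0.0
    halted: bool = False           # emergency shutdown flag

    def check(self, action: str, contract: str, notional: float) -> tuple[bool, str]:
        """Return (allowed, reason) and record spend only when the action is allowed."""
        if self.halted:
            return False, "halted"
        if action not in self.allowed_actions:
            return False, "action outside permission scope"
        if contract.lower() not in self.allowed_contracts:
            return False, "contract not whitelisted"
        if notional > self.max_per_tx:
            return False, "per-transaction limit exceeded"
        if self.spent_today + notional > self.max_daily:
            return False, "daily limit exceeded"
        self.spent_today += notional
        return True, "ok"

    def trip(self) -> None:
        """Called by a risk monitor to de-escalate: block every further action."""
        self.halted = True
```

For example, `AgentPolicy({"swap"}, 1_000, 2_500, {"0xrouter"})` lets a swap worth 800 through the placeholder router, rejects any `"transfer"` action, and refuses everything once `trip()` has been called. Keeping the check deterministic and separate from the model means a misbehaving agent cannot reason its way past it.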

2. The amplifying effect of Web3's technical architecture on AI: Public, composable, irreversible

As AI evolves from an assistive tool to an autonomous decision system, a pivotal question emerges: What is the combined effect when this evolution intersects with Web3? The answer: Web3's technical architecture acts as a force multiplier, amplifying both the efficiency advantages and the inherent risk of AI.

It enables exponential gains in automated trading efficiency while significantly expanding the potential scope and severity of risks. This amplifying effect stems from the confluence of Web3's three structural traits: Public data, protocol composability, and irreversible settlement.

From an upside perspective, Web3's primary allure for AI originates at the data layer. On-chain data is inherently public, transparent, verifiable, and traceable. This provides a transparency advantage for risk control and compliance that traditional finance struggles to match—one can observe the complete trajectory of fund flows, cross-protocol interaction paths, and the processes of fund aggregation and dispersion on a single, unified ledger.

Concurrently, on-chain data presents significant interpretive challenges. Addresses are "semantically sparse" (lacking clear identity markers, making it difficult to directly link them to real-world entities), the dataset contains immense noise, and data is severely fragmented across different blockchains. When legitimate transactional behavior is interwoven with obfuscated fund flows, simple rule-based systems often fail to effectively distinguish between them. Consequently, deriving meaningful insight from on-chain data becomes a high-cost engineering task in itself, requiring the deep integration of transaction sequences, contract call logic, cross-chain messaging, and off-chain intelligence to produce conclusions that are both explainable and reliable.
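
One basic building block of this engineering task is linking associated addresses by following fund flows. The sketch below is a simplified breadth-first trace. It assumes transfers are already decoded into hypothetical `(sender, receiver, amount)` tuples and uses a crude dust filter. Real tracing must also handle cross-chain messages, contract-internal transfers, and mixers, none of which are modeled here.

```python
from collections import deque


def trace_funds(transfers, source, max_hops=3, min_amount=0.0):
    """Follow outgoing transfers from `source` breadth-first.

    transfers: iterable of (sender, receiver, amount) tuples (hypothetical format).
    Returns {address: hop_distance} for every address reached within max_hops,
    ignoring transfers smaller than min_amount (a crude dust filter).
    """
    outgoing = {}
    for sender, receiver, amount in transfers:
        if amount >= min_amount:
            outgoing.setdefault(sender, []).append(receiver)

    reached = {source: 0}
    queue = deque([source])
    while queue:
        addr = queue.popleft()
        if reached[addr] == max_hops:
            continue  # do not expand beyond the hop limit
        for nxt in outgoing.get(addr, []):
            if nxt not in reached:
                reached[nxt] = reached[addr] + 1
                queue.append(nxt)
    return reached
```

With transfers A→B (100), B→C (50), B→D (50), C→E (50), and D→X (0.01), a two-hop trace from A with `min_amount=1` reaches B, C, and D. E sits three hops away, and the dust transfer to X is ignored.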

The more critical impacts arise from Web3's composability and irreversibility. Protocol composability dramatically accelerates the pace of financial innovation.

A trading strategy can be assembled like building blocks, flexibly combining modules for lending, decentralized exchanges (DEXs), derivatives, and cross-chain bridges to form novel financial products. However, this very characteristic also accelerates the propagation speed of risk. A minor vulnerability in one component can be rapidly amplified as it travels along the interconnected "supply chain" of protocols, and can be quickly repurposed by attackers as a reusable exploit template.
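
The propagation described above can be modeled as reachability in a dependency graph: if one component is compromised, every protocol that integrates it, directly or transitively, is exposed. The sketch below assumes a hypothetical, hand-maintained dependency map with illustrative protocol names. It ignores how severe each exposure is and only answers "who could be affected".

```python
from collections import deque


def exposed_protocols(depends_on, compromised):
    """Return every protocol that directly or transitively depends on `compromised`.

    depends_on: {protocol: [components it integrates]} (hypothetical dependency map).
    """
    # Invert the edges so we can walk from a component to the protocols built on it.
    dependents = {}
    for proto, deps in depends_on.items():
        for dep in deps:
            dependents.setdefault(dep, set()).add(proto)

    exposed, queue = set(), deque([compromised])
    while queue:
        component = queue.popleft()
        for proto in dependents.get(component, ()):
            if proto not in exposed:
                exposed.add(proto)
                queue.append(proto)
    return exposed
```

With `{"vault": ["lending"], "lending": ["oracle"], "dex": ["oracle"], "aggregator": ["dex", "bridge"]}`, a compromised `"oracle"` exposes the lending market, the DEX, and, one step further, the vault and the aggregator. This is the "supply chain" effect in miniature.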

Irreversibility fundamentally alters the post-event landscape. In traditional finance, erroneous or fraudulent transactions might be remedied through cancellations, payment reversals, or inter-institutional compensation mechanisms. In Web3, once funds have completed a cross-chain transfer, entered a mixing service, or been rapidly dispersed across a multitude of addresses, the difficulty of recovery increases exponentially. This characteristic forces the industry to pivot the core focus of security and risk control from traditional post-hoc explanation to pre-event warning and real-time blocking. Effective loss mitigation now depends on the ability to intervene before or during a risk event.

3. Divergent integration paths for CEXs and DeFi: Same AI, different control planes

Understanding Web3's amplifying effect leads to a practical implementation question: While both centralized exchanges (CEXs) and decentralized finance (DeFi) protocols may integrate AI technology, their focal points for application differ substantially.

The core reason lies in the fundamental difference in the control planes (a network engineering term used here to denote the capability to intervene over funds and protocol operations) they possess. When applying AI to trading and risk management, CEXs and DeFi naturally develop different emphases. CEXs operate with a complete account system and a strong control plane. This allows them to implement KYC (Know Your Customer)/KYB (Know Your Business) procedures, impose transaction limits, and establish formalized processes for fund freezing and transaction rollback. Within the CEX context, AI's value often manifests as more efficient audit processes, more timely identification of suspicious transactions, and greater automation in generating compliance documentation and preserving audit trails.

DeFi protocols, by virtue of decentralization, operate with inherently limited intervention capabilities (a weak control plane). Most lack any built-in mechanism to freeze a user's assets as a CEX can, functioning more as an open environment of "weak control + strong composability." Consequently, practical risk control is distributed across multiple points: Front-end interfaces, API layers, wallet authorization steps, and compliance middleware, such as risk-control APIs, risk address databases, and on-chain monitoring networks.

This structural reality dictates that AI applications in DeFi must prioritize real-time comprehension and early-warning capabilities. Their focus shifts to early detection of anomalous transaction paths, prompt identification of downstream risk exposures, and the rapid dissemination of risk signals to entities that do possess actionable control, such as exchanges, stablecoin issuers, law enforcement partners, or protocol governance bodies.

For instance, Tokenlon performs a KYA (Know Your Address) check on the address that initiates a transaction. It denies service to addresses on known blacklists, blocking the transaction before funds can move into untraceable channels.
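
A KYA gate of this kind sits in front of the quoting or order path. The sketch below is not Tokenlon's implementation. The blacklist contents, the shortened placeholder addresses, and the `request_quote` function are all hypothetical, and in practice the labels would come from a maintained risk-address database.

```python
# Hypothetical blacklist with shortened placeholder addresses; keys are stored lowercase.
RISKY_ADDRESSES = {
    "0xbad1": "exploit",
    "0xbad2": "sanctioned",
}


def kya_check(initiator, blacklist):
    """Return (allowed, reason); addresses are compared case-insensitively."""
    label = blacklist.get(initiator.lower())
    return (False, label) if label else (True, None)


def request_quote(initiator, blacklist=RISKY_ADDRESSES):
    """Hypothetical front door of a trading service."""
    allowed, reason = kya_check(initiator, blacklist)
    if not allowed:
        # Refuse service before any order is signed or any funds move.
        return {"status": "denied", "reason": reason}
    return {"status": "ok"}  # placeholder for the normal quoting path
```

The check is deliberately placed before any signature is requested. For a weak-control-plane protocol, refusing to serve is the last point at which intervention is still cheap.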

From an engineering perspective, this divergence in control planes shapes the very nature of the AI systems built for each domain. In a CEX, AI functions primarily as a high-throughput decision-support and operational automation engine, designed to enhance the efficiency and accuracy of existing processes. In DeFi, AI operates more as a persistent, on-chain situational awareness and intelligence distribution system, whose core mandate is enabling early risk discovery and facilitating rapid, coordinated response. While both paths evolve toward agent-based systems, their underlying constraint mechanisms differ fundamentally. CEX constraints are enforced through internal governance policies and account permissions. In contrast, DeFi constraints must rely on other safeguards: Programmable authorization, transaction simulation verification, and whitelisting of permissible contract interactions.
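
Transaction simulation verification can be as simple as comparing a simulator's predicted balance changes with what the user or agent declared it intended to do. The sketch below assumes a hypothetical `intent` structure and a dictionary of signed balance deltas from some simulator. It does not call any real simulation API.

```python
def verify_simulation(intent, simulated_deltas):
    """Compare simulated balance changes with the declared intent.

    intent: {"spend": (token, max_amount), "receive": (token, min_amount)} (hypothetical).
    simulated_deltas: {token: signed balance change}; negative means outflow.
    Returns a list of violations; an empty list means the transaction may be signed.
    """
    violations = []
    spend_token, max_spend = intent["spend"]
    recv_token, min_recv = intent["receive"]

    # Any outflow of a token the user never agreed to spend is suspicious.
    for token, delta in simulated_deltas.items():
        if delta < 0 and token != spend_token:
            violations.append(f"unexpected outflow of {token}")
    if -simulated_deltas.get(spend_token, 0.0) > max_spend:
        violations.append(f"spends more {spend_token} than declared")
    if simulated_deltas.get(recv_token, 0.0) < min_recv:
        violations.append(f"receives less {recv_token} than the declared minimum")
    return violations
```

A swap declared as "spend up to 1,000 USDC, receive at least 0.3 ETH" passes when the simulation shows −1,000 USDC and +0.31 ETH. It fails if the same transaction would also drain WBTC through a malicious approval or router.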

4. AI agents, x402, and the formation of a machine-executable trading system: From bots to agent networks

Traditional trading bots were often simple automations built on fixed strategies and static interfaces. AI agents represent a leap toward generalizable executors—capable of dynamically selecting tools, orchestrating multi-step processes, and adapting their actions based on feedback.

For AI agents to operate as true economic actors, two conditions are essential: First, well-defined, programmable boundaries for authorization and risk control, and second, machine-native interfaces for payment and settlement. The x402 protocol addresses the second condition by embedding payments into standard HTTP semantics, reviving the long-reserved HTTP 402 "Payment Required" status code. This decouples the payment step from human-centric workflows, enabling AI agents and servers to execute seamless machine-to-machine transactions without the need for accounts, subscription services, or API keys.
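
The request, 402, pay, and retry loop can be illustrated without any network. The sketch below simulates both sides in-process. The `X-PAYMENT` header name and the `accepts`/`payTo`/`maxAmountRequired` fields mirror our reading of the public x402 specification but should be treated as assumptions. No real payment, signature, or on-chain settlement happens, and the budget check is our own addition to show where an agent's spending boundary fits.

```python
import base64
import json

PRICE = 10_000          # hypothetical price in the asset's smallest unit
PAY_TO = "0xSERVICE"    # hypothetical recipient address


def server(headers):
    """Toy resource server returning (status_code, json_body)."""
    payment = headers.get("X-PAYMENT")
    if payment is None:
        # No payment attached: answer 402 with machine-readable payment terms.
        return 402, {"accepts": [{"payTo": PAY_TO, "maxAmountRequired": PRICE}]}
    proof = json.loads(base64.b64decode(payment))
    # A real server would verify a signed payment here, not trust a JSON claim.
    if proof.get("payTo") != PAY_TO or proof.get("amount", 0) < PRICE:
        return 402, {"error": "insufficient or misdirected payment"}
    return 200, {"data": "market snapshot"}


def agent_fetch(budget):
    """Toy agent: request, read the 402 terms, pay if within budget, retry once."""
    status, body = server({})
    if status != 402:
        return body
    terms = body["accepts"][0]
    if terms["maxAmountRequired"] > budget:
        raise RuntimeError("price exceeds the agent's budget")
    # Placeholder for signing an on-chain payment authorization.
    proof = {"payTo": terms["payTo"], "amount": terms["maxAmountRequired"]}
    header = base64.b64encode(json.dumps(proof).encode()).decode()
    status, body = server({"X-PAYMENT": header})
    if status != 200:
        raise RuntimeError(body.get("error", "payment rejected"))
    return body
```

`agent_fetch(50_000)` returns the resource after one paid retry, while `agent_fetch(5_000)` stops before paying. No account, subscription, or API key is involved at any step.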

Standardization of payment and invocation paves the way for a new form of machine-economy organization. AI agents will not be confined to executing single-point tasks. Instead, they will form interconnected networks, engaging in continuous cycles of "pay for invocations > acquire data > generate insight > execute trade" across multiple services. However, standardization also standardizes risk. Standardized payments can foster automated calls to fraud and money laundering services, and standardized strategy generation can lead to the proliferation of replicable attack paths.

This underscores a critical imperative: The convergence of AI and Web3 is not a simple integration of AI models and on-chain data; it is a systemic paradigm shift. As trading and risk control evolve into machine-executable models, the industry must build a complete infrastructure for this new reality—one that ensures machines are simultaneously actionable, constrainable, auditable, and blockable. Without this foundational layer, the efficiency gains promised will be eclipsed by the uncontrolled risk.

Chapter 2: How AI reshapes Web3 trading efficiency and decision logic

1. Core challenges in the Web3 trading environment and AI's points of intervention

A fundamental structural issue in Web3 trading is liquidity fragmentation, caused by the coexistence of centralized exchanges (CEXs) and decentralized exchanges (DEXs) across disparate blockchains. This often creates a gap between the visible market price and the price and size at which one can actually trade. Here, AI serves as a critical routing layer, analyzing factors like market depth, slippage, fees, routing paths, and network latency to recommend optimal order distribution and execution paths, thereby improving trade efficiency.
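
The simplest version of this routing decision is to split a buy order across venues by always filling the cheapest fee-adjusted ask first. The sketch below assumes hypothetical order-book snapshots and a flat fee rate per venue. It ignores gas, latency, and the book moving while the order is executed, which a real router must account for.

```python
def route_order(books, quantity):
    """Greedy split of a buy order across venues.

    books: {venue: {"fee": fee_rate, "asks": [(price, size), ...]}} (hypothetical snapshot).
    Fills the cheapest fee-adjusted ask levels first, across all venues.
    Returns ({venue: filled_size}, average fee-adjusted price).
    """
    levels = []
    for venue, book in books.items():
        for price, size in book["asks"]:
            levels.append((price * (1 + book["fee"]), size, venue))
    levels.sort()  # cheapest effective price first

    fills, remaining, cost = {}, quantity, 0.0
    for eff_price, size, venue in levels:
        if remaining <= 0:
            break
        take = min(size, remaining)
        fills[venue] = fills.get(venue, 0) + take
        cost += take * eff_price
        remaining -= take
    if remaining > 0:
        raise ValueError("insufficient visible liquidity")
    return fills, cost / quantity
```

Consider a CEX with asks 2 @ 100.0 and 5 @ 101.0 at a 0.1% fee, and a DEX with asks 1 @ 99.8 and 3 @ 100.5 at a 0.3% fee. A 4-unit buy splits 2/2 between the venues, at an average fee-adjusted price of about 100.275. This is visibly worse than the best quoted price, which is exactly the gap described above.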

High volatility, high risk, and information asymmetry have long characterized the cryptocurrency market, and they are further amplified during event-driven market movements. AI delivers value by synthesizing fragmented information. It structures and analyzes data from project announcements, on-chain fund flows, social sentiment, and research materials, helping users form a quicker, clearer understanding of project fundamentals and risks to reduce blind spots in decision-making. While AI-assisted trading is not new, its role is deepening from a simple research aid to core strategic functions like signal identification, sentiment analysis, and strategy generation. In Web3's fast-paced environment, several functions are becoming valuable, scalable utilities: real-time tracking of abnormal fund flows and whale movements, quantifying social media sentiment and narrative momentum, and automatically classifying and signaling market regimes (trending, range-bound, or volatility expansion).
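
A regime label of this kind can be derived from price data alone. The sketch below is a deliberately simple rule set: net drift over a window signals a trend, and a jump in return volatility versus the previous window signals expansion. The window length and thresholds are hypothetical and would need calibration per asset. Production systems typically combine many more features.

```python
import statistics


def classify_regime(prices, window=20, trend_threshold=0.02, vol_ratio=1.5):
    """Label the latest window as 'volatility expansion', 'trending', or 'range-bound'.

    Thresholds are hypothetical and would need calibration per asset and timeframe.
    """
    if len(prices) < 2 * window + 1:
        raise ValueError("need at least 2 * window + 1 prices")
    returns = [b / a - 1 for a, b in zip(prices, prices[1:])]
    recent, earlier = returns[-window:], returns[-2 * window:-window]

    vol_recent = statistics.pstdev(recent)
    vol_earlier = statistics.pstdev(earlier)
    drift = prices[-1] / prices[-window - 1] - 1  # net move over the latest window

    if vol_earlier > 0 and vol_recent / vol_earlier >= vol_ratio:
        return "volatility expansion"
    if abs(drift) >= trend_threshold:
        return "trending"
    return "range-bound"
```

A steady climb from 100 to 140 is labeled "trending", a price oscillating between 100 and 101 is "range-bound", and a quiet market that suddenly swings 5% per step is "volatility expansion". Checking volatility first is a design choice: a sharp, volatile move is treated as a risk state before it is treated as a trend.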

However, the boundaries of AI applications must be emphasized. The price efficiency and information quality of current crypto markets remain unstable. If the upstream data processed by AI contains noise, manipulation, or misattribution, it leads to the classic "garbage in, garbage out" problem. Therefore, when evaluating AI-generated trading signals, several factors matter more than the signal strength itself: the credibility of information sources, the integrity of the logical evidence chain, a clear expression of confidence levels, and mechanisms for counterfactual verification (i.e., cross-validating signals across multiple dimensions).
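
One way to operationalize cross-validation is to accept a signal only when several independent sources agree, and to report an explicit confidence with it. In the sketch below, the `Evidence` fields, the reliability weights, and both thresholds are hypothetical. The confidence is a reliability-weighted share of agreeing evidence, a simple heuristic rather than a calibrated probability.

```python
from dataclasses import dataclass


@dataclass
class Evidence:
    source: str         # e.g. "on-chain flows", "order book", "social sentiment"
    direction: int      # +1 bullish, -1 bearish, 0 neutral
    reliability: float  # 0..1, hypothetical prior on how trustworthy the source is


def corroborate(evidence, min_sources=2, min_confidence=0.6):
    """Return (direction, confidence); direction is 0 when the evidence bar is not met."""
    weights = {1: 0.0, -1: 0.0}
    sources = {1: set(), -1: set()}
    for e in evidence:
        if e.direction in weights:
            weights[e.direction] += e.reliability
            sources[e.direction].add(e.source)
    total = sum(e.reliability for e in evidence) or 1.0  # neutral evidence dilutes confidence
    direction = 1 if weights[1] >= weights[-1] else -1
    confidence = weights[direction] / total
    # Require agreement from distinct sources, not repeated claims from one source.
    if len(sources[direction]) < min_sources or confidence < min_confidence:
        return 0, confidence
    return direction, confidence
```

Strong on-chain inflows plus positive sentiment, partly offset by a bearish order book, yield a bullish call with roughly 0.72 confidence. The same story repeated twice by a single social channel is rejected, because it counts as only one source.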

2. Industry landscape and evolution direction of Web3 trading AI tools

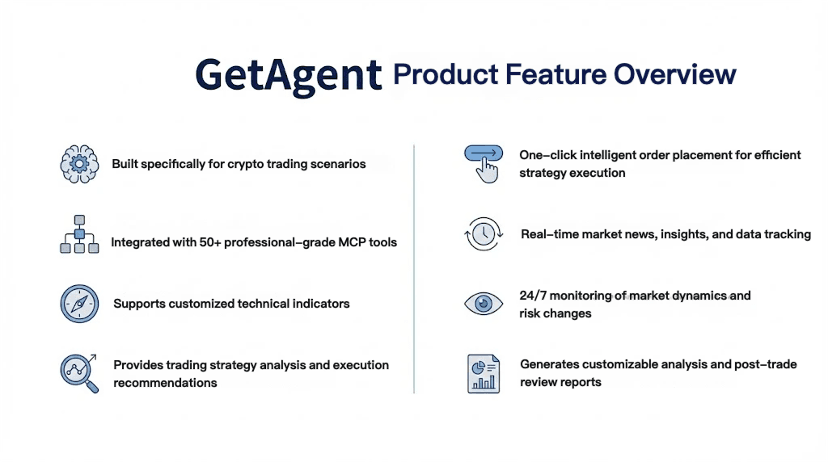

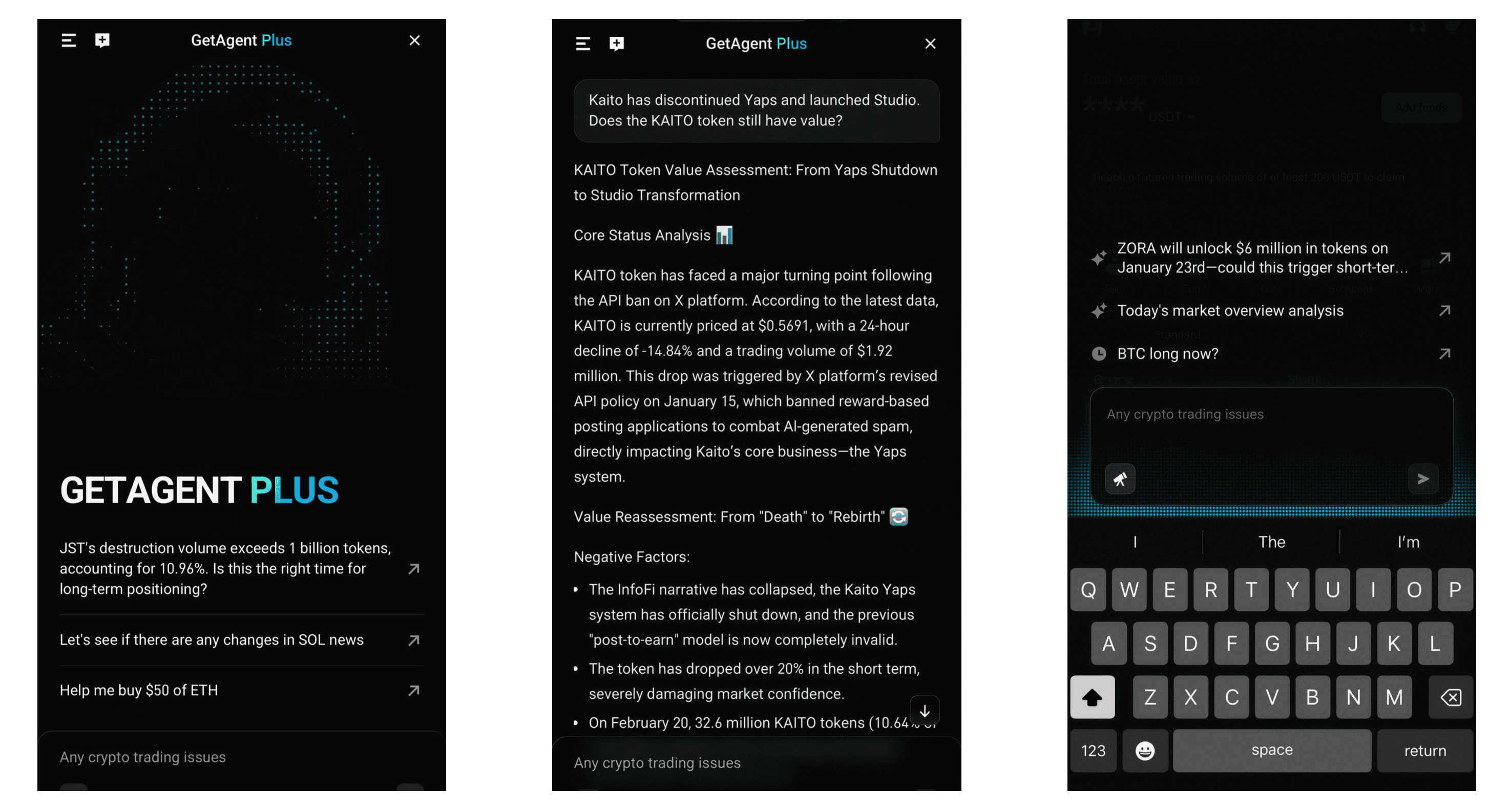

The evolution direction of AI tools embedded within exchanges is shifting from traditional market commentary toward full trade lifecycle assistance, placing greater emphasis on unified information visibility and distribution efficiency. Taking Bitget's GetAgent as an example, its positioning leans more toward a general-purpose trading information and decision-support tool.

It aims to lower the comprehension barrier by presenting key market variables, potential risk points, and core informational highlights in a more accessible format, alleviating user struggles with information acquisition and professional understanding.

On-chain bots and copy trading represent the diffusion trend of execution-side automation. Their core advantage lies in transforming professional trading strategies into replicable, standardized execution workflows, lowering the entry barrier for general users. In the future, a significant source for copy trading may come from AI-powered quantitative teams or systematic strategy providers. However, this also transforms the strategy quality question into the more complex issue of strategy sustainability and explainability. Users need to understand not just past performance but also the underlying logic, applicable scenarios, and potential risks of a strategy.

A critical issue to monitor is market capacity and strategy crowding. When large amounts of capital act simultaneously on similar signals with similar execution logic, potential returns are quickly compressed away, while market impact costs and drawdown can increase significantly. This effect is particularly amplified in on-chain trading environments, where slippage volatility, maximal extractable value (MEV), routing uncertainty, and abrupt liquidity changes can further increase the negative externality of crowded trades, often resulting in realized profits falling far short of expectations.
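
The crowding effect can be quantified with the widely used empirical square-root market-impact approximation, impact ≈ Y·σ·√(Q/V), where Q is the order size and V the daily volume. This model is our swapped-in illustration, not the report's method. The sketch assumes all crowded strategies trade the same side at the same time, so each one pays impact on the combined flow. It ignores MEV and on-chain slippage, which would only make the picture worse.

```python
import math


def net_edge(alpha, q, daily_volume, sigma, n_strategies, y=1.0):
    """Expected edge left after market impact when n strategies trade the same signal.

    Uses the square-root impact approximation impact = y * sigma * sqrt(Q / V),
    where Q = n_strategies * q is the combined order size. alpha and the return value
    are fractional returns; y (~1) is a rough, market-dependent constant.
    """
    combined = n_strategies * q
    impact = y * sigma * math.sqrt(combined / daily_volume)
    return alpha - impact
```

Take a hypothetical 1% edge, 100,000 per strategy, 50 million in daily volume, and 4% daily volatility. Trading alone, the strategy keeps roughly 0.8% after impact. With 50 copies of the same strategy, the expected edge turns negative, at about −0.26%.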

Therefore, a more neutral and pragmatic conclusion is: The more AI trading tools move toward automation, the more essential it becomes to combine capabilities with constraint mechanisms.

Such mechanisms include explicit strategy applicability conditions, strict risk-related limits, automatic shutdown rules under abnormal market movements, and auditable data sources and signal generation. Without these, "efficiency gains" themselves may become an amplifier of risks, exposing users to undesired losses.
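
An automatic shutdown rule can be expressed as a small state machine fed with each new observation. The sketch below is a hypothetical `KillSwitch` with two illustrative triggers: drawdown from peak equity, and an abnormally large price move within one interval. The limits are placeholders, and once tripped, the switch stays off until a human resets it.

```python
class KillSwitch:
    """Hypothetical shutdown rule: halt when equity drawdown or a single-interval
    price move breaches preset limits."""

    def __init__(self, max_drawdown=0.10, max_move=0.08):
        self.max_drawdown = max_drawdown  # e.g. 10% below peak equity
        self.max_move = max_move          # e.g. 8% price move within one interval
        self.peak = None
        self.last_price = None
        self.halted_reason = None

    def update(self, equity, price):
        """Feed one observation; returns True while trading may continue."""
        if self.halted_reason:
            return False  # stays halted until a human intervenes
        self.peak = equity if self.peak is None else max(self.peak, equity)
        if (self.peak - equity) / self.peak >= self.max_drawdown:
            self.halted_reason = "drawdown limit"
        elif self.last_price and abs(price / self.last_price - 1) >= self.max_move:
            self.halted_reason = "abnormal price move"
        self.last_price = price
        return self.halted_reason is None
```

Making the halt sticky is the key design choice. An automated strategy that could resume on its own would simply repeat its error at machine speed once conditions briefly normalized.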

3. Bitget GetAgent's role in an AI trading system

GetAgent is not positioned as a simple conversational chatbot, but rather as a trader's "second brain" in the complex liquidity environment. Its core logic is to build a closed loop of data, strategy, and execution by deeply integrating AI algorithms with real-time, multi-dimensional data. Its primary value can be summarized in four points:

(1) Real-time intelligence and data tracking

Traditional workflows for monitoring news and analyzing data require users to have strong skills in web crawling, searching, and analytics, which sets a high entry barrier. By integrating more than 50 professional tools, GetAgent brings real-time visibility to what is otherwise a black box of market information. It not only tracks updates from mainstream financial media in real time but also has in-depth access to several information layers, such as social media sentiment and key project team developments, ensuring that users are no longer left with information blind spots.

At the same time, GetAgent provides robust filtering and distillation capabilities. It can effectively screen out noise, such as hype around low-quality tokens, and accurately extract the core variables that truly drive price movements, including critical signals like security vulnerability alerts and large token unlock schedules. Finally, GetAgent aggregates what would otherwise be fragmented information, such as on-chain transaction flows, countless announcements, and research reports, translating it into intuitive, actionable logic. For example, it may directly tell users that "although the project has great social buzz, its core developers' funds have been steadily flowing out," revealing potential risks clearly.

(2) Trading strategy generation and execution assistance

GetAgent generates customized trading strategies based on the user's specific needs. This significantly lowers the barrier to trade execution and shifts trading from professional, command-driven operation toward more precise intent- and strategy-driven decisions. Based on a user's historical trading preferences, risk tolerance, and current positions, GetAgent offers highly targeted guidance rather than generic bearish or bullish suggestions. For instance: "Given your BTC holdings and the current volatility pattern, consider running a grid bot within an X–Y range."
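
For readers unfamiliar with grid bots, the core mechanic is a ladder of evenly spaced price levels between a lower and an upper bound. Buy orders rest below the current price and sell orders above it. The sketch below uses an arithmetic grid. The bounds in the usage note are hypothetical stand-ins for the X–Y range in the example, and this is not GetAgent's or Bitget's implementation.

```python
def grid_levels(lower, upper, n_grids):
    """Evenly spaced (arithmetic) price levels between lower and upper.

    Returns n_grids + 1 levels including both bounds.
    """
    if not 0 < lower < upper or n_grids < 1:
        raise ValueError("require 0 < lower < upper and n_grids >= 1")
    step = (upper - lower) / n_grids
    return [lower + i * step for i in range(n_grids + 1)]


def initial_orders(levels, current_price):
    """Split levels into resting buys (below price) and sells (above price)."""
    buys = [p for p in levels if p < current_price]
    sells = [p for p in levels if p > current_price]
    return buys, sells
```

With hypothetical bounds of 60,000 and 70,000 and five grids, the levels are 2,000 apart. At a current price of 65,000, the bot would rest buys at 60,000, 62,000, and 64,000 and sells at 66,000, 68,000, and 70,000, profiting from oscillation inside the range and becoming exposed if price leaves it.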

For complex cross-asset and cross-protocol operations, GetAgent simplifies the process with natural-language interaction. Users express their trading intent in everyday language, and GetAgent will automatically match the optimal strategy and optimize it for market depth and slippage, enabling ordinary users to participate in sophisticated Web3 trading.

(3) Synergy with automated trading systems

GetAgent is not a standalone tool, but a central decision-making node within a broader automated trading stack. Upstream, it ingests a multi-dimensional feed of on-chain data, real-time market prices, social-media sentiment, and professional research. After internal steps such as structuring, key information summarization, and correlation/logic analysis, it forms a decision framework for strategies. Downstream, it outputs precise decision references and parameter recommendations for automated trading systems, quantitative AI agents, and copy trading systems, enabling system-wide coordination and linkage.

(4) Risks and constraints behind efficiency gains

While embracing the efficiency boost enabled by AI, it is essential to remain highly vigilant about the associated risks. No matter how compelling GetAgent's signals may appear, the core principle of "AI proposes, humans approve" should remain consistent. As Bitget continues to invest in research and development and enhance AI capabilities, the team is not only focused on enabling more accurate trading recommendations from GetAgent but also actively exploring how it can justify the feasibility of its recommendations with complete, evidence-based reasoning. For example, why is a certain entry point recommended? Is it due to a confluence of technical indicators, or because abnormal inflows have appeared in on-chain whale addresses?
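
The "AI proposes, humans approve" principle, together with evidence-based reasoning, can be enforced structurally rather than left to convention. In the sketch below, every proposal must carry its evidence, and only a human-approved proposal can reach the order function. The `Proposal` type, the `approve`/`execute` functions, and the injected `send_order` callable are hypothetical illustrations, not GetAgent's interface.

```python
from dataclasses import dataclass, field


@dataclass
class Proposal:
    """A hypothetical AI trade proposal that must carry its own justification."""
    action: str
    params: dict
    evidence: list = field(default_factory=list)  # e.g. ["MA/RSI confluence", "whale inflow"]
    approved_by: str = ""


def approve(proposal, reviewer):
    """Human sign-off; proposals without supporting evidence are rejected outright."""
    if not proposal.evidence:
        raise ValueError("proposal has no supporting evidence")
    proposal.approved_by = reviewer
    return proposal


def execute(proposal, send_order):
    """Only approved proposals reach the (injected) order function."""
    if not proposal.approved_by:
        raise PermissionError("AI proposes, humans approve: no approval recorded")
    return send_order(proposal.action, **proposal.params)
```

Because `approved_by` and `evidence` travel with the proposal, every executed order leaves a record of why it was suggested and who signed off. That record is the auditable trail this section calls for.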

In Bitget's view, the long-term value of GetAgent is not merely to deliver deterministic trading conclusions, but to help traders and trading systems better identify the types of risk they are taking on, and whether the risks align with the current market, so they can make more rational trading decisions.

4. Balancing trading efficiency and risks: Security support by BlockSec

Behind AI-driven gains in trading efficiency, risk control remains a core issue that must not be overlooked. Grounded in a deep understanding of Web3 trading risks, BlockSec provides comprehensive security capabilities, helping users effectively manage potential risks while benefiting from AI-enabled trading convenience:

To address data noise and misattribution risk, BlockSec's Phalcon Explorer offers powerful trade simulation and multi-source cross-validation, helping filter out manipulative data and false signals so users can distinguish genuine market trends.

To mitigate market risk caused by strategy crowding, MetaSleuth's fund-flow tracking identifies capital concentration across similar strategies in real time. It issues early warnings of liquidity stampede risk and provides actionable suggestions for users to adjust their trading strategies.

At the execution layer, MetaSuites offers an approval diagnosis feature that detects abnormal approvals in real time and lets users instantly revoke risky ones, reducing the likelihood of fund losses caused by permission abuse or erroneous execution.
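
The general idea behind approval diagnosis can be illustrated with ERC-20 allowances. Flag unlimited approvals (the common `2**256 - 1` value), approvals to spenders outside a trusted list, and unusually large allowances. Revocation then means calling the token's standard `approve(spender, 0)`. The sketch below is not MetaSuites' logic. The input format, trusted list, and threshold are hypothetical, and collecting allowances from chain data is not shown.

```python
MAX_UINT256 = 2**256 - 1  # the conventional "unlimited" ERC-20 allowance


def diagnose_approvals(approvals, trusted_spenders, large_threshold):
    """Flag risky ERC-20 allowances.

    approvals: list of (token, spender, allowance) tuples, with allowance in raw
    token units (hypothetical input; gathering it from chain data is not shown).
    Returns (token, spender, reason) findings worth revoking or reviewing.
    """
    findings = []
    trusted = {s.lower() for s in trusted_spenders}
    for token, spender, allowance in approvals:
        if allowance == 0:
            continue  # already revoked
        if spender.lower() not in trusted:
            findings.append((token, spender, "spender not on trusted list"))
        elif allowance == MAX_UINT256:
            findings.append((token, spender, "unlimited allowance"))
        elif allowance >= large_threshold:
            findings.append((token, spender, "allowance above threshold"))
    return findings
```

Any allowance to an untrusted spender is reported, even a tiny one. An unknown contract holding spending rights is the typical precondition for permission-abuse losses, regardless of the current amount.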

Chapter 3: The evolution of Web3 offense-defense in the AI era and a new security paradigm

While AI boosts trading efficiency, it also makes attacks faster, stealthier, and more destructive. Web3's decentralized architecture naturally leads to fragmented responsibilities, and smart contract composability allows local flaws to spill over into systemic risk. Meanwhile, the widespread adoption of foundation models further lowers the skill bar for understanding vulnerabilities and generating attack paths. In this context, attacks are evolving toward end-to-end automation and industrial-scale execution.

In response, security defense must evolve from the traditional concept of "better detection" to "actionable, real-time, closed-loop handling". In the specific scenario of bots executing trades, this means engineering-focused governance across authorization management, prevention of erroneous execution, and systemic chain-reaction risks, thereby establishing a new paradigm of Web3 security fit for the AI era.

1. How AI reshapes Web3 attack methods and risk profiles

In Web3, the security struggle is not just about whether vulnerabilities exist; it is more deeply rooted in the fragmented responsibilities that stem from a decentralized architecture. Take a protocol as an example: The code is developed and deployed by its project team. The user-facing interface may be maintained by a different team. Transactions are initiated through wallets and routing protocols. Funds move across DEXs, lending protocols, cross-chain bridges, and aggregators. And finally, on/off-ramps are handled by centralized platforms. Against this backdrop, when a security incident occurs, any node in the process can claim that it should not bear full responsibility, since its scope of control is very narrow. Attackers can exploit this structural fragmentation by orchestrating a multi-stage attack chain across multiple weak nodes, so that no single entity has global control and the attack succeeds.

The introduction of AI makes this structural weakness even more pronounced. Attack paths become easier for AI systems to search, generate, and reuse, and risk will consistently propagate faster than human coordination can keep up with. At that point, traditional, human-driven incident response becomes obsolete. Warnings about systemic risk from smart contract vulnerabilities are not alarmist: DeFi's composability allows a small coding flaw to be amplified rapidly along dependency chains and ultimately escalate into an ecosystem-level security catastrophe. Meanwhile, the irreversible nature of on-chain fund settlement compresses the response window to minutes.

According to BlockSec's DeFi Security Incident Library, total losses across the crypto sector from hacks and exploits in 2024 exceeded $2 billion, with DeFi protocols remaining the primary targets. These figures show that even as industry spending on security continues to rise, attacks still occur frequently, often with large single-incident losses and destructive impact. As smart contracts become a core component of financial infrastructure, vulnerabilities are no longer merely engineering defects; more often, they are a form of systemic financial risk that can be maliciously weaponized.

AI reshapes the attack surface in another visible way: Formerly experience-driven, manual steps in the attack chain are moving toward end-to-end automation.

First, automation of vulnerability discovery and understanding. Foundation models are particularly good at code reading, semantic abstraction, and logical reasoning. They can quickly extract potential weak links from complex contract logic and generate precise exploit triggers, transaction sequences, and contract-call compositions, significantly lowering the skill bar for exploitation.

Second, automation of exploit-path generation. In recent years, industry research has begun to adapt large language models (LLMs) into end-to-end exploit code generators. By combining LLMs with specialized toolchains, one can build an automated process that, given a specific contract address and block height, collects relevant information, interprets contract behavior, generates a compilable exploit contract, and validates it against historical blockchain states. This means that effective attack techniques no longer depend solely on manual tuning by a few top-notch security researchers; they can be engineered into scalable, production-like attack pipelines.

Broader security research further supports this trend: Given a CVE (Common Vulnerabilities and Exposures) description, GPT-4 has demonstrated a high success rate in generating working exploit code on certain test sets. This suggests that converting natural-language vulnerability descriptions into executable attack code is rapidly becoming easier. Once exploit generation matures into a capability that can be invoked at any time, large-scale attacks become a reality.

In Web3, the amplification effects of scaled attacks are represented in two ways:

First, pattern-based attacks, where adversaries apply the same playbook to mass-scan for contracts sharing similar architectures and vulnerability classes, identify targets, and then probe and exploit targets in batches.

Second, the emergence of a supply chain for money laundering and fraud, which lets malicious actors operate without building a full infrastructure stack themselves. For instance, Chinese-language escrow-style illicit markets on platforms such as Telegram have matured into full-fledged marketplaces for criminal services. Two major markets of this kind, Huione Guarantee and Xinbi Guarantee, have reportedly facilitated more than $35 billion in stablecoin transactions since 2021, spanning money laundering, trading of exfiltrated data, and more severe criminal services. Illicit Telegram markets also offer specialized fraud tooling, including deepfake generators. This steady, platform-based supply of criminal services means adversaries can not only generate exploit plans and paths faster but also quickly acquire toolkits for laundering stolen funds. As a result, what was once a one-off technical exploit can now become one link in a full-fledged illicit industry.

2. An AI-driven security defense system

As AI upgrades the offensive playbook, it can bring equally important value to the defensive side, provided that security capabilities traditionally reliant on human expertise are turned into reproducible, scalable engineering systems. Such a defense system rests on three layers of capability:

(1) Smart contract code analysis and automated auditing

AI's key advantage in smart contract auditing is its ability to organize fragmented audit knowledge into a structured system. Traditional static analysis and formal verification tools excel at deterministic rules, but they often struggle with complex business logic, multi-contract composition and call chains, and implicit assumptions, leaving them stuck in a trade-off between false negatives and false positives. LLMs, by contrast, are strong at semantic interpretation, pattern abstraction, and cross-file reasoning, which makes them well suited as a pre-audit layer for rapid contract comprehension and preliminary risk surfacing.

That said, AI is not meant to replace traditional audit tooling; instead, it increasingly serves as the orchestration layer that ties these tools into an efficient, automated audit pipeline. In practice, an AI model first produces a semantic summary of the contract, candidate risks, and plausible exploit paths, then passes this information to static or dynamic analysis tools for targeted validation. Finally, the AI consolidates the validation results, evidence chain, exploit conditions, and remediation recommendations into a structured, auditable report. This division of labor (AI for understanding, tools for validation, humans for decision-making) is likely to become a lasting engineering model for smart contract auditing.
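
The division of labor described above can be sketched as a three-stage pipeline. This is a minimal illustration under stated assumptions: the contract is modeled as a hypothetical dictionary mapping function names to ordered statements, `llm_triage` is a stand-in for the model's recall-oriented "understanding" step (a real system would prompt an LLM), and `validate` is a toy stand-in for a static-analysis rule that flags a write to `balances` after an external call (a checks-effects-interactions violation).

```python
from dataclasses import dataclass, field

# Hypothetical toy contract: function name -> statements in execution order.
CONTRACT = {
    "withdraw": ["require(balances[msg.sender] >= amt)",
                 "msg.sender.call{value: amt}('')",
                 "balances[msg.sender] -= amt"],
    "deposit": ["balances[msg.sender] += msg.value"],
    "sweep": ["balances[owner] = 0",
              "owner.call{value: total}('')"],
}

@dataclass
class Finding:
    function: str
    hypothesis: str
    confirmed: bool = False
    evidence: list = field(default_factory=list)

def llm_triage(contract):
    """Stage 1, understanding (stand-in for an LLM): propose broad hypotheses.
    Here, every function that makes an external call is a reentrancy suspect."""
    return [Finding(name, "possible reentrancy via external call")
            for name, stmts in contract.items()
            if any(".call" in s for s in stmts)]

def validate(finding, contract):
    """Stage 2, validation (stand-in for a static-analysis rule): confirm only
    if `balances` is written after the external call."""
    stmts = contract[finding.function]
    call_idx = next(i for i, s in enumerate(stmts) if ".call" in s)
    finding.evidence = [s for s in stmts[call_idx + 1:] if "balances" in s]
    finding.confirmed = bool(finding.evidence)
    return finding

def build_report(contract):
    """Stage 3, reporting: keep confirmed findings; a human makes the final call."""
    validated = [validate(f, contract) for f in llm_triage(contract)]
    return [{"function": f.function,
             "hypothesis": f.hypothesis,
             "evidence": f.evidence,
             "status": "pending human review"}
            for f in validated if f.confirmed]

report = build_report(CONTRACT)
for item in report:
    print(item)
```

The broad hypotheses come from the understanding step (both `withdraw` and `sweep` are flagged), but only the tool-confirmed finding reaches the report, and even that one is left for a human to decide.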

(2) Anomalous transaction detection and on-chain behavioral pattern recognition

In this domain, AI is primarily used to turn public but highly noisy on-chain data into actionable security signals. The core challenge on-chain is not data scarcity but noise overload: High-frequency trading bots, split transfers, cross-chain hops, and complex contract routing are intertwined, making simple threshold-based rules ineffective at identifying anomalies.

AI is better suited to these complex settings. Using techniques such as sequence modeling and graph-based correlation analysis, AI systems can identify precursor behaviors associated with common attack classes, such as permission anomalies, unusually dense contract-call activity, or indirect ties to known risky entities. They can also continuously compute downstream risk exposure, letting security teams track fund movements, the scope of impact, and the remaining window for interception.
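
As a deliberately simple stand-in for the sequence models mentioned above, the sketch below flags "unusually dense contract-call activity" by comparing each block's call count for one address against that address's own rolling baseline. The window size, z-score threshold, and the floor on the standard deviation are illustrative assumptions, not tuned values.

```python
from statistics import mean, stdev

def call_density_alerts(counts, window=10, z_threshold=4.0):
    """Flag blocks whose contract-call count for a single address jumps far
    above that address's own recent baseline.

    counts: calls per block for one address, oldest first.
    Returns the indices of anomalous blocks."""
    alerts = []
    for i in range(window, len(counts)):
        history = counts[i - window:i]
        mu = mean(history)
        sigma = max(stdev(history), 1.0)  # floor avoids dividing by ~0 on flat history
        if (counts[i] - mu) / sigma > z_threshold:
            alerts.append(i)
    return alerts

# A bot with steady activity, then a sudden burst at block 12.
calls = [3, 4, 3, 5, 4, 3, 4, 4, 3, 5, 4, 3, 40, 4, 3]
print(call_density_alerts(calls))  # [12]
```

Using a per-address baseline rather than one global threshold is what keeps a steady high-frequency bot from triggering an alert on every block, while a sudden change in its behavior still stands out.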

(3) Real-time monitoring and automated response

In production environments, defense capabilities must run on always-on security platforms rather than one-off analysis tools. BlockSec's Phalcon Security is one example. Its goal is not post-mortem review but intercepting risks within the response window wherever possible, through three core capabilities: Real-time monitoring at the blockchain and mempool levels, anomalous behavior recognition, and automated response.

Across multiple real-world Web3 attacks, Phalcon Security identified potential attack signals early by continuously watching transaction behavior, contract interaction logic, and sensitive operations. It lets users configure automated handling policies (such as pausing contracts or blocking suspicious transfers), so that risk propagation can be stopped before an attack completes. The key value of these capabilities is not simply "spotting more issues", but giving defenders response speeds that match automated attacks, moving Web3 security beyond passive, audit-centric models toward proactive, real-time defense.

3. Security challenges and countermeasures in smart trading and machine execution scenarios

In trading, manual confirmation is gradually being replaced by closed-loop machine execution, and the center of gravity for security is shifting from contract vulnerabilities toward permission management and execution-path security.

First, wallet security, private-key management, and authorization risks are significantly amplified. Because AI agents invoke tools and contracts frequently, they inevitably require more frequent transaction signing and more complex authorization configurations. If a private key is compromised, an authorization scope is too broad, or an authorized counterparty is spoofed, losses can escalate within a very short time. Traditional advice, such as urging users to be more careful, becomes ineffective in machine-executed workflows: These systems are designed to minimize human intervention, so users cannot monitor every automated action in real time.

Second, AI agents and payment protocols (such as x402) introduce subtler risks of authorization abuse and erroneous execution. Protocols like x402 allow APIs, applications, and AI agents to make instant stablecoin payments over HTTP. This improves operational efficiency, but it also lets machines autonomously make payments and invoke capabilities throughout a workflow, which opens new paths for adversaries: They may disguise induced payments, calls, authorizations, and other malicious actions as normal workflow steps to evade defenses.

In addition, AI models themselves may take seemingly compliant but incorrect actions under prompt injection, data poisoning, or adversarial inputs. The central issue is not whether x402 is "good" or "bad", but that the smoother and more automated the trading pipeline becomes, the more critical it is to enforce tight permission boundaries, spending limits, revocable authorization, and complete audit and replay capabilities. Without these controls, a small error can be amplified into large-scale, automated, cascading losses.
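
The controls named above (permission boundaries, spending limits, revocable authorization, and an audit trail for replay) can be sketched as a guard that every agent payment must pass before it is signed. The `AgentGrant` class, its fields, and the limits used here are hypothetical; this is not part of the x402 specification or any wallet API, and it omits real concerns such as day rollover and persistent storage.

```python
from dataclasses import dataclass, field

@dataclass
class AgentGrant:
    """Hypothetical spending grant for one AI agent (illustrative only)."""
    agent_id: str
    per_tx_limit: int        # max amount per payment, in smallest token units
    daily_limit: int         # max cumulative spend; day rollover omitted for brevity
    allowed_payees: set      # explicit allowlist of payees
    revoked: bool = False
    spent_today: int = 0
    audit_log: list = field(default_factory=list)

    def authorize(self, payee, amount, purpose):
        """Check one payment against the grant and log the decision for replay."""
        if self.revoked:
            decision = "deny: grant revoked"
        elif payee not in self.allowed_payees:
            decision = "deny: payee not allowlisted"
        elif amount > self.per_tx_limit:
            decision = "deny: per-transaction limit"
        elif self.spent_today + amount > self.daily_limit:
            decision = "deny: daily limit"
        else:
            self.spent_today += amount
            decision = "allow"
        self.audit_log.append((self.agent_id, payee, amount, purpose, decision))
        return decision == "allow"

    def revoke(self):
        """Revocation takes effect on the very next authorization check."""
        self.revoked = True

grant = AgentGrant("agent-7", per_tx_limit=100, daily_limit=250,
                   allowed_payees={"data-api"})
results = [
    grant.authorize("data-api", 80, "price feed"),
    grant.authorize("data-api", 150, "bulk export"),   # over per-tx limit
    grant.authorize("unknown-host", 10, "tip"),        # payee not allowlisted
    grant.authorize("data-api", 90, "price feed"),
    grant.authorize("data-api", 90, "price feed"),     # 80 + 90 + 90 > 250
]
grant.revoke()
results.append(grant.authorize("data-api", 1, "ping"))
print(results)  # [True, False, False, True, False, False]
```

Logging denied attempts alongside approved ones is a deliberate choice in this sketch: a run of denials can itself be a useful signal that an agent is being induced to pay.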

Finally, automated trading can also trigger systemic chain reactions. When large numbers of AI agents rely on similar signal sources and strategy templates, their combined, synchronized effect on the market can be severe: A single trigger can cause massive simultaneous buying or selling, order cancellations, or cross-chain transfers, materially amplifying volatility and leading to large-scale liquidations and liquidity stampedes. Attackers may also exploit this homogeneity by issuing misleading signals, manipulating localized liquidity, or attacking key routing protocols, triggering cascading failures both on-chain and off-chain.

In other words, machine trading escalates traditional individual operational risk into a more destructive collective behavioral risk. This risk need not stem from malicious attacks; it can also arise from highly consistent automated "rational decisions." When all machines make the same decision based on the same logic, systemic risk can emerge.

Therefore, a more sustainable security paradigm in the era of intelligent trading is not merely about emphasizing real-time monitoring, but about engineering concrete solutions for the three types of risks mentioned above:

- Hierarchical authorization and automatic downgrading mechanisms that strictly cap losses when authorization controls fail, so that a single permission breach cannot lead to total loss.

- Pre-execution simulation and reasoning-chain auditing to intercept erroneous executions and malicious actions induced by external manipulation, so that each automated trade is checked for logical soundness before it executes.

- De-homogenization strategies, circuit breaker design, and cross-entity collaboration to prevent systemic cascades, so that a single market fluctuation does not escalate into an industry-wide crisis.

Only in this way can security defenses keep pace with machine execution, intervening earlier, more steadily, and more effectively at key risk points and keeping intelligent trading systems safe and stable.
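
As one concrete form of the pre-execution simulation listed above, the sketch below prices a swap against a constant-product (x * y = k) pool before the trade is signed and rejects it if slippage exceeds a limit. It assumes a Uniswap v2-style pool with a 0.3% fee taken on the input and integer rounding down; in practice the simulation would typically run against current chain state (for example via a node's `eth_call` or a forked chain), which this local model only approximates. The pool sizes and limits are made up.

```python
def simulate_swap(amount_in, reserve_in, reserve_out, fee_bps=30):
    """Output of a constant-product pool with the fee taken on input,
    using integer math in the style of Uniswap v2 (30 bps = 0.3% fee)."""
    amount_in_with_fee = amount_in * (10_000 - fee_bps)
    numerator = amount_in_with_fee * reserve_out
    denominator = reserve_in * 10_000 + amount_in_with_fee
    return numerator // denominator

def pre_execution_check(amount_in, reserve_in, reserve_out,
                        expected_price, max_slippage_bps):
    """Simulate the trade before signing and reject it if the realized price
    is worse than the strategy's expected price by more than the limit."""
    amount_out = simulate_swap(amount_in, reserve_in, reserve_out)
    realized_price = amount_out / amount_in
    slippage_bps = (expected_price - realized_price) / expected_price * 10_000
    return slippage_bps <= max_slippage_bps, amount_out, slippage_bps

# Pool with 1,000,000 of each token; the strategy assumes a price of 1.0.
small_ok, small_out, _ = pre_execution_check(1_000, 1_000_000, 1_000_000, 1.0, 100)
large_ok, large_out, _ = pre_execution_check(100_000, 1_000_000, 1_000_000, 1.0, 100)
print(small_ok, small_out)   # True 996
print(large_ok, large_out)   # False 90661
```

The small trade loses about 40 bps and passes; the large one moves a tenth of the reserves, loses over 900 bps, and is stopped before signing, which is the kind of erroneous execution the check is meant to catch.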

Chapter 4: AI applications in Web3 risk control, AML, and risk identification

Compliance challenges in Web3 are not driven solely by anonymity; they are deeply intertwined with several complex factors: The tension between anonymity and traceability, the path explosion caused by cross-chain and multi-protocol interactions, and the fragmented responses that result from differing levels of control in DeFi and on CEXs. The core opportunity for AI in this field lies in transforming massive volumes of noisy on-chain data into actionable risk insights, by linking address profiling, fund-path tracking, and contract/agent risk assessment into a closed loop and turning these capabilities into real-time alerts, action orchestration, and auditable evidence chains.

With the advent of AI agents and machine payments, the compliance sector will face new challenges in protocol adaptation and responsibility definition. The evolution of RegTech (Regulatory Technology) into modular, automated interfaces will become an inevitable trend in the industry.

1. Structural challenges in Web3 risk control and compliance

(1) Conflict between anonymity and traceability

The first core conflict in Web3 compliance is the coexistence of anonymity and traceability. On-chain transactions are transparent and immutable, theoretically making every fund flow traceable. However, on-chain addresses do not directly correspond to real-world identities. Market participants can transform "traceable" into "traceable but difficult to attribute" by frequently changing addresses, splitting fund transfers, using intermediary contracts, and engaging in cross-chain activities. As a result, while fund flows can be tracked, identifying the true controllers of the funds becomes a significant challenge.

Therefore, Web3 risk control and Anti-Money Laundering (AML) cannot rely solely on account registration and centralized clearing to assign responsibility, as traditional finance does. Instead, a comprehensive risk evaluation system based on behavioral patterns and fund paths needs to be developed, one that determines which addresses belong to the same entity, where funds originate and where they go, which protocols they interact with and how, and what the true purpose of those interactions is. These details are essential to building a clear picture of risk.

(2) Compliance complexity of cross-chain and multi-protocol interactions

In Web3, fund flows rarely stay within a single chain or protocol. They often pass through complex sequences of operations, such as cross-chain bridging, DEX swaps, lending, derivatives trading, and further cross-chain hops. As fund paths grow longer, compliance challenges shift from spotting a single suspicious transaction to understanding the intent and outcome of the entire cross-domain path. What makes this even harder is that every step in the path may look normal on its own (for example, a standard token swap or a liquidity deposit), yet taken together the steps can obscure the source of funds or support illicit cash-outs.

(3) Scenario divergence: Regulatory differences between DeFi and CEX

The third core challenge stems from the substantial gap in regulatory frameworks and enforcement capabilities between DeFi and CEX. CEXs inherently offer a strong control framework, featuring full account systems, strict deposit and withdrawal controls, and centralized risk management and fund-freezing capabilities. This makes it easier to enforce regulatory requirements via a duty-based framework.

DeFi, by contrast, operates as a public financial infrastructure with a "weaker control layer and stronger composability." In many cases, protocols themselves lack the functionality to freeze funds. Instead, the actual risk control points are scattered across several nodes, including the front-end interface, routing protocols, wallet authorizations, stablecoin issuers, and on-chain infrastructure.

This means that the same risk might manifest as suspicious deposits/withdrawals and account anomalies in the CEX environment, while in DeFi, it may appear as abnormal fund paths, contract interaction logic issues, or irregular authorization behaviors. To ensure comprehensive compliance across both scenarios, a system must be established that can understand the true intent of funds across different contexts and map control actions flexibly to various control layers.

2. AI-driven AML practices

In light of the structural challenges mentioned above, the core value of AI in the Web3 AML domain lies not in "generating compliance reports," but in transforming complex on-chain fund flows and interaction logic into actionable compliance loops: Detecting abnormal risks earlier, providing clearer explanations of risk causes, triggering enforcement actions more swiftly, and maintaining a complete, auditable evidence chain.

The first step in AML work is address profiling and behavioral analysis. This goes beyond simply labeling addresses to analyzing them in a deeper behavioral context: Which contracts and protocols does the address interact with frequently? Do its funds come from overly concentrated sources? Does its transaction behavior show typical money-laundering patterns, such as splitting and consolidating funds? Is it connected, directly or indirectly, to high-risk entities such as blacklisted addresses or suspicious platforms?
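
One of the patterns listed above, splitting and then consolidating funds, can be expressed as a simple graph query. The sketch below is a topology-only heuristic under assumed inputs (a list of `(sender, receiver, amount)` transfers with made-up addresses); real detectors would also weigh timing, amount similarity, and address age, which are omitted here.

```python
from collections import defaultdict

def split_consolidate_patterns(transfers, min_fanout=3):
    """Find (source, sink, n) where `source` sends funds to at least
    `min_fanout` intermediaries that each forward to the same `sink`.

    transfers: iterable of (sender, receiver, amount) tuples."""
    out_edges = defaultdict(set)
    for sender, receiver, _amount in transfers:
        out_edges[sender].add(receiver)
    patterns = []
    for source, intermediaries in out_edges.items():
        sink_counts = defaultdict(int)
        for mid in intermediaries:
            for sink in out_edges.get(mid, ()):
                if sink != source:
                    sink_counts[sink] += 1
        for sink, n in sink_counts.items():
            if n >= min_fanout:
                patterns.append((source, sink, n))
    return patterns

transfers = [
    ("S", "m1", 30), ("S", "m2", 35), ("S", "m3", 35),   # split
    ("m1", "T", 29), ("m2", "T", 34), ("m3", "T", 34),   # consolidate
    ("alice", "bob", 5), ("bob", "carol", 5),            # ordinary chain
]
print(split_consolidate_patterns(transfers))  # [('S', 'T', 3)]
```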

The combination of large models and graph learning techniques plays a critical role in this process by aggregating seemingly fragmented and unrelated transaction records into structured entities that are more likely to belong to the same individual or criminal network. This enables compliance actions to shift from monitoring individual addresses to focusing on the actual controlling entities, significantly improving the efficiency and accuracy of compliance processes.
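
The aggregation step can be illustrated with union-find, a much simpler technique than the graph learning the text describes. The sketch assumes that some upstream heuristic (for example, several addresses repeatedly depositing into the same exchange deposit address) has already produced pairs of addresses judged likely to share a controller; union-find then merges those pairs transitively into entity clusters. The addresses are made up.

```python
class UnionFind:
    def __init__(self):
        self.parent = {}

    def find(self, x):
        self.parent.setdefault(x, x)
        while self.parent[x] != x:
            self.parent[x] = self.parent[self.parent[x]]  # path halving
            x = self.parent[x]
        return x

    def union(self, a, b):
        ra, rb = self.find(a), self.find(b)
        if ra != rb:
            self.parent[rb] = ra

def cluster_entities(links):
    """links: (addr_a, addr_b) pairs an upstream heuristic judged likely to
    share a controller. Returns clusters sorted for stable output."""
    uf = UnionFind()
    for a, b in links:
        uf.union(a, b)
    clusters = {}
    for addr in list(uf.parent):
        clusters.setdefault(uf.find(addr), set()).add(addr)
    return sorted(clusters.values(), key=lambda c: sorted(c))

links = [("0xa1", "0xa2"), ("0xa2", "0xa3"), ("0xb1", "0xb2")]
entities = cluster_entities(links)
print(entities)
```

Because merging is transitive, a single bad link can fuse two unrelated entities, so the quality of the link heuristics matters far more than the clustering step itself.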

Building on this, fund-flow tracking and cross-chain tracing connect risky intent with its eventual consequences. Cross-chain actions are not merely about moving tokens from chain A to chain B; they often involve asset format conversions, obfuscation of fund paths, and new intermediary risks. AI's main role here is to track downstream fund flows and keep that picture continuously updated. When suspicious funds begin to move, the system must not only follow each step accurately but also assess in real time which key nodes, such as CEX deposit addresses or stablecoin issuer contracts, the funds are approaching, and at which of those nodes they can be frozen, investigated, or intercepted. This is also the core reason the industry increasingly emphasizes real-time alerts over post-event analysis: Once funds enter an irreversible diffusion phase, the cost of freezing and recovering them rises sharply, and the success rate of such actions drops substantially.
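
A minimal sketch of downstream tracing, assuming transfers have already been normalized into `(sender, receiver)` edges (with a bridge modeled as an ordinary node, which glosses over matching deposits and releases across chains) and that a hypothetical `control_points` mapping labels addresses where funds can be frozen or intercepted. Breadth-first search reports how many hops away each reachable control point is, a rough proxy for how close the funds are to a point of intervention.

```python
from collections import deque

def trace_to_control_points(transfers, source, control_points, max_hops=6):
    """Breadth-first trace of downstream fund movement from `source`.

    transfers: (sender, receiver) edges observed so far; a live system would
    extend them as new blocks arrive and re-run the trace.
    control_points: address -> label for nodes where funds can be stopped.
    Returns {address: (label, hops)} for each control point reached."""
    graph = {}
    for sender, receiver in transfers:
        graph.setdefault(sender, []).append(receiver)
    reached = {}
    seen = {source}
    queue = deque([(source, 0)])
    while queue:
        node, hops = queue.popleft()
        if node in control_points and node != source:
            reached[node] = (control_points[node], hops)
        if hops == max_hops:
            continue
        for nxt in graph.get(node, []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append((nxt, hops + 1))
    return reached

edges = [("hacker", "h1"), ("h1", "bridge"), ("bridge", "h2"),
         ("h2", "cex_deposit_9"), ("h1", "h3")]
points = {"cex_deposit_9": "exchange deposit"}
print(trace_to_control_points(edges, "hacker", points))
# {'cex_deposit_9': ('exchange deposit', 4)}
```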

Furthermore, smart contract and AI agent behavior risk assessments extend risk control from fund flows to the level of execution logic. The core challenge in contract risk assessment lies in complex business logic and the prevalence of composed function calls.

Traditional rules and static analysis tools tend to miss implicit assumptions that span functions, contracts, and protocols, so risks go unidentified. AI is better suited to deep semantic understanding and adversarial hypothesis generation. It can first map out a contract's key state variables, permission boundaries, fund-flow rules, and external dependencies, then simulate and validate abnormal call sequences to identify potential risks at the contract level.
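
Simulating abnormal call sequences can be illustrated with bounded exhaustive search against an invariant, the same idea that fuzzers such as Echidna and Foundry's invariant tests apply at much larger scale. The `ToyVault` below is a hypothetical Python model with a deliberately planted bug, not real contract code; the invariant says no balance may go negative and balances must sum to the pool total.

```python
from itertools import product

class ToyVault:
    """Toy pooled vault with a planted bug: withdraw checks the pool total
    instead of the caller's own balance."""
    def __init__(self):
        self.balances = {"alice": 0, "bob": 0}
        self.total = 0

    def deposit(self, user, amount):
        self.balances[user] += amount
        self.total += amount

    def withdraw(self, user, amount):
        if amount > self.total:          # BUG: should compare with self.balances[user]
            raise ValueError("insufficient funds")
        self.balances[user] -= amount
        self.total -= amount

def invariant_holds(vault):
    """No account may go negative, and balances must sum to the pool total."""
    return (all(b >= 0 for b in vault.balances.values())
            and sum(vault.balances.values()) == vault.total)

ACTIONS = [(fn, user, amt) for fn in ("deposit", "withdraw")
           for user in ("alice", "bob") for amt in (1, 5)]

def find_violation(max_len=3):
    """Try every call sequence up to max_len; return the first that breaks the invariant."""
    for length in range(1, max_len + 1):
        for seq in product(ACTIONS, repeat=length):
            vault = ToyVault()
            try:
                for fn, user, amt in seq:
                    getattr(vault, fn)(user, amt)
            except ValueError:
                continue                 # reverted sequences are not violations
            if not invariant_holds(vault):
                return seq
    return None

print(find_violation())
# (('deposit', 'alice', 1), ('withdraw', 'bob', 1))
```

The shortest violating sequence (one user deposits, a different user withdraws) depends on an assumption that spans two accounts, which is exactly what a function-by-function review can miss.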

Agent behavior risk assessment focuses more on "strategy and permission governance": What actions did the AI agent perform within its authorized scope? Did it exhibit abnormal call frequencies or scales? Did it keep executing trades under adverse market conditions, such as abnormal slippage or low liquidity? Do these actions comply with predefined compliance strategies? All such behaviors must be recorded in real time, quantified with scores, and automatically trigger downgrade or circuit breaker mechanisms when risk thresholds are breached.
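
The record, score, and downgrade loop described above might look like the sketch below. The permission tiers, weights, thresholds, and decay factor are all hypothetical; what matters is the structure: Each action is scored against the adverse conditions the text lists (abnormal call frequency, abnormal slippage, low liquidity), and crossing the threshold steps the agent down one tier instead of leaving it at full authority.

```python
from dataclasses import dataclass

# Hypothetical permission tiers, from most to least privileged.
TIERS = ["full", "reduced", "read_only", "halted"]

@dataclass
class AgentMonitor:
    max_calls_per_min: int = 30
    max_slippage_bps: int = 100
    min_pool_liquidity: float = 50_000.0
    downgrade_score: float = 3.0
    tier_index: int = 0
    score: float = 0.0

    def record(self, calls_last_min, slippage_bps, pool_liquidity):
        """Score one action, update the decaying risk score, and return the
        agent's current tier (downgrading one step if the threshold is hit)."""
        points = 0.0
        if calls_last_min > self.max_calls_per_min:
            points += 1.0          # abnormal call frequency
        if slippage_bps > self.max_slippage_bps:
            points += 1.5          # trading through abnormal slippage
        if pool_liquidity < self.min_pool_liquidity:
            points += 1.0          # trading into thin liquidity
        # Exponential decay: older behavior counts for less than recent behavior.
        self.score = 0.5 * self.score + points
        if self.score >= self.downgrade_score and self.tier_index < len(TIERS) - 1:
            self.tier_index += 1   # circuit breaker: step down one tier
            self.score = 0.0       # restart scoring at the new tier
        return TIERS[self.tier_index]

monitor = AgentMonitor()
tiers = [
    monitor.record(10, 20, 200_000),    # normal action
    monitor.record(45, 250, 30_000),    # burst + high slippage + thin pool
    monitor.record(50, 300, 20_000),    # keeps going under adverse conditions
]
print(tiers)  # ['full', 'reduced', 'read_only']
```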

To truly transform these compliance capabilities into industry productivity, a clear path to productization is required: At the foundational level, deep integration of multi-chain data and security intelligence; at the middle layer, the development of entity profiling and fund path analysis engines; at the upper layer, the provision of real-time risk alerts and enforcement process orchestration functions; at the outer layer, the output of standardized audit reports and the ability to retain evidence chains. The necessity of productization stems from the fact that the challenge in compliance and risk control lies not in the accuracy of individual analyses, but in the adaptability of continuous operations: Compliance rules evolve with regulatory demands, malicious tactics constantly escalate, and the on-chain ecosystem undergoes perpetual iteration.

Only systematic products capable of continuous learning, ongoing updates, and persistent traceability can address these dynamic changes.

In practice, making on-chain risk identification and AML capabilities effective depends less on the accuracy of isolated models than on whether they can be productized into a continuously operating, auditable, and collaborative engineering system. For example, the core idea behind BlockSec's Phalcon Compliance is not simply to tag high-risk addresses but to link risk detection, evidence retention, and follow-up actions into a closed loop. It does this through an address labeling system, behavioral profiling, cross-chain fund-path tracking, and a multi-dimensional risk scoring mechanism, providing a one-stop compliance solution for the Web3 space.

In an industry where AI and agents are extensively involved in trading and execution, such compliance capabilities matter even more. Risks are no longer limited to active attacks by "malicious accounts"; they can also arise from passive violations caused by misexecuted automated strategies or misused permissions. Moving compliance logic into the transaction and execution path, so that risks are identified and flagged before funds reach irreversible settlement, is becoming a key component of risk control in the era of intelligent trading.

3. New compliance in the era of machine trading

As trading shifts from "Human-Machine Interface (HMI)" interactions to "machine API calls," a series of new compliance challenges arises: The focus of regulation expands beyond transaction behavior itself to the protocols and automation mechanisms those transactions depend on. The x402 protocol matters not only because it makes machine-to-machine payments smoother, but also because it embeds payment functionality deeply into the HTTP interaction flow, enabling automatic settlement in the "Agent Economy."

Once these mechanisms are scaled, the focus of compliance will shift to "under what authorization and constraints the machines make payments and execute trades," including the identity of the agent, funding limits, strategic constraints, payment purposes, and whether there are any abnormal payment cycles or inducement behaviors. All of this information must be fully recorded and auditable.
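
One of the signals named above, abnormal payment cycles, can be checked with a standard depth-first search for cycles in a directed graph built from machine-payment logs. The `(payer, payee)` log format and agent names are assumptions, and a cycle is only a signal for review: Legitimate flows can also loop.

```python
def find_payment_cycle(payments):
    """Return one payment cycle as a list of agents (first agent repeated at
    the end), or None if the payment graph is acyclic.

    payments: (payer_agent, payee_agent) pairs from machine-payment logs."""
    graph = {}
    for payer, payee in payments:
        graph.setdefault(payer, []).append(payee)
        graph.setdefault(payee, [])
    WHITE, GRAY, BLACK = 0, 1, 2      # unvisited, on current path, finished
    color = {node: WHITE for node in graph}
    path = []

    def dfs(node):
        color[node] = GRAY
        path.append(node)
        for nxt in graph[node]:
            if color[nxt] == GRAY:    # back edge closes a loop
                return path[path.index(nxt):] + [nxt]
            if color[nxt] == WHITE:
                found = dfs(nxt)
                if found:
                    return found
        path.pop()
        color[node] = BLACK
        return None

    for node in graph:
        if color[node] == WHITE:
            found = dfs(node)
            if found:
                return found
    return None

logs = [("agent_a", "agent_b"), ("agent_b", "agent_c"),
        ("agent_c", "agent_a"), ("agent_a", "data_api")]
cycle = find_payment_cycle(logs)
print(cycle)  # ['agent_a', 'agent_b', 'agent_c', 'agent_a']
```

The recursive search is fine for an illustration; a system scanning large payment graphs would use an iterative version to avoid Python's recursion limit.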

Closely following this is the challenge of defining responsibilities. The AI agent itself is not a legal entity, but it can execute transactions on behalf of individuals or institutions, potentially leading to financial losses or compliance risks. When the agent's decisions depend on external tools, data, or even paid services from third parties (like data APIs or trading execution services), determining responsibility becomes unclear, as it involves developers, operators, users, platforms, and service providers.

A more practical and actionable engineering approach is to integrate accountability traceability into the core of the system design. All high-impact actions should automatically generate a structured decision chain (including the source of trigger signals, risk assessment processes, simulation results, authorization scope, and executed transaction parameters), with version control for key strategies and parameters, and support for full playback functionality. This way, when issues arise, the root cause can be quickly pinpointed—whether it's a strategy logic error, a data input mistake, an authorization configuration issue, or a malicious attack on the toolchain.
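
A structured decision chain of this kind can be made tamper-evident by linking each record to the hash of the previous one, so that replay can also detect after-the-fact edits. Hash chaining is an illustrative choice here rather than something the text prescribes; the record fields mirror the ones listed above, and all values are made up.

```python
import hashlib
import json

GENESIS = "0" * 64

def _digest(body):
    # Canonical JSON (sorted keys) so the same record always hashes the same way.
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()

def append_decision(chain, record):
    """Append a decision record linked to the previous entry's hash."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS
    body = {"prev": prev_hash, **record}
    chain.append({**body, "hash": _digest(body)})

def verify_chain(chain):
    """Replay the chain; any edited or reordered entry fails verification."""
    prev_hash = GENESIS
    for entry in chain:
        body = {k: v for k, v in entry.items() if k != "hash"}
        if body["prev"] != prev_hash or _digest(body) != entry["hash"]:
            return False
        prev_hash = entry["hash"]
    return True

chain = []
append_decision(chain, {
    "trigger": "signal:momentum",            # source of the trigger signal
    "risk_score": 0.42,                       # output of the risk assessment
    "simulation": {"expected_out": 996, "slippage_bps": 40},
    "authorization": {"grant": "agent-7", "per_tx_limit": 100},
    "tx": {"pool": "A/B", "amount_in": 1000},
    "strategy_version": "momentum@1.4.2",     # version-pinned strategy
})
append_decision(chain, {
    "trigger": "signal:rebalance",
    "risk_score": 0.10,
    "simulation": {"expected_out": 498, "slippage_bps": 12},
    "authorization": {"grant": "agent-7", "per_tx_limit": 100},
    "tx": {"pool": "A/C", "amount_in": 500},
    "strategy_version": "rebalance@0.9.0",
})
intact = verify_chain(chain)
chain[0]["risk_score"] = 0.05   # simulate after-the-fact tampering
tampered = verify_chain(chain)
print(intact, tampered)  # True False
```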

Finally, the evolution of RegTech will shift from traditional "post-event screening tools" toward "infrastructure for continuous monitoring and enforceable controls." This means that compliance is no longer an internal process managed by a single department but a set of standardized platform capabilities: The policy layer translates regulatory requirements and risk control rules into executable code (policy-as-code); the execution layer monitors fund flows and market participants' behaviors, while the control layer manages core actions like trade delays, fund limits, risk isolation, and emergency freezes; and the collaboration layer swiftly delivers verifiable evidence to stakeholders (like exchanges, stablecoin issuers, and law enforcement) for timely action.
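
A minimal policy-as-code sketch: Rules are data (a condition plus a control action), so they can be versioned, reviewed, and updated as regulations change without rewriting the engine. The rule names, thresholds, and event fields are hypothetical, and resolving conflicts by highest severity is just one possible design; returning every rule that fired gives the collaboration layer something concrete to hand over as evidence.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass(frozen=True)
class Policy:
    name: str
    condition: Callable[[dict], bool]
    action: str        # control action: "delay", "limit", "isolate", or "freeze"
    severity: int      # higher wins when several policies match

# Illustrative rules only; thresholds and labels are hypothetical.
POLICIES = [
    Policy("sanctioned_counterparty",
           lambda e: e["counterparty_label"] == "sanctioned", "freeze", 4),
    Policy("stolen_funds_exposure",
           lambda e: e["hops_from_exploit"] <= 2, "isolate", 3),
    Policy("large_outflow",
           lambda e: e["amount_usd"] >= 1_000_000, "delay", 2),
    Policy("new_agent_high_volume",
           lambda e: e["agent_age_days"] < 7 and e["amount_usd"] >= 10_000,
           "limit", 1),
]

def evaluate(event, policies=POLICIES):
    """Return the highest-severity action among matching policies, plus the
    names of every rule that fired (the evidence for that decision)."""
    fired = [p for p in policies if p.condition(event)]
    if not fired:
        return "allow", []
    top = max(fired, key=lambda p: p.severity)
    return top.action, [p.name for p in fired]

event = {"counterparty_label": "exchange", "hops_from_exploit": 2,
         "amount_usd": 1_500_000, "agent_age_days": 30}
print(evaluate(event))
# ('isolate', ['stolen_funds_exposure', 'large_outflow'])
```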

As machine payments and machine trading become standardized, compliance capabilities must undergo the same interface and automation upgrades. Otherwise, an unbridgeable gap will open between the high-speed execution of machine trading and the slower response of manual compliance. AI gives risk control and AML the opportunity to become foundational infrastructure in the era of intelligent trading: With earlier warnings, faster collaboration, and more actionable technical methods, risks can be contained within the shortest possible impact window, providing core support for the compliant development of the Web3 industry.

Conclusion

Looking back, it's clear that the integration of AI and Web3 is not just a simple technological upgrade, but a comprehensive systemic shift. Trading is gradually moving towards machine execution, while attacks are simultaneously becoming more automated and scaled. In this process, security, risk control, and compliance are transitioning from traditional "support functions" to essential infrastructures within the intelligent trading system. Efficiency and risk are now intertwined, growing together rather than occurring in separate stages. The faster the system operates, the greater the demand for robust risk control to keep pace.

In trading, AI and agent systems have made it easier to access information and execute trades, changing how people participate in the market and allowing more users to get involved in Web3 trading. However, this has also led to new risks, such as crowded strategies and execution errors. In terms of security, the automation of vulnerability discovery, attack generation, and money laundering has concentrated risks, causing them to escalate more rapidly and increasing the demands on the responsiveness and effectiveness of defense systems. In the areas of risk control and compliance, address profiling, path tracking, and behavioral analysis technologies have evolved from simple analytical tools into engineering systems with real-time processing capabilities. The emergence of machine payment mechanisms like x402 has further pushed compliance issues into a deeper exploration of how machines are authorized, constrained, and audited.

All of this leads to a clear conclusion: In the era of intelligent trading, what's truly scarce is not faster decision-making or more aggressive automation, but security, risk control, and compliance capabilities that can match the speed of machine execution. These capabilities must be designed as executable, composable, and auditable systems, rather than passive processes for post-event remediation.

For trading platforms, this means that while improving trading efficiency, they must build risk boundaries, logical evidence chains, and human oversight mechanisms deeply into their AI systems to balance efficiency and security. For security and compliance providers, it means moving monitoring, early-warning, and blocking capabilities upstream, before funds spiral out of control, and building proactive defense and real-time response systems.

BlockSec and Bitget agree that, in the near future, the key to the sustainable development of intelligent trading systems lies not in who adopts AI technology first, but in who can simultaneously implement both machine executability and machine constraints. Only when efficiency and risk control evolve together can AI truly become a long-term driver of the Web3 trading ecosystem, rather than an amplifier of systemic risks.

The integration of Web3 and AI is an inevitable trend in the industry's development, and security, risk control, and compliance are the core guarantees for ensuring the stable growth of this trend. BlockSec will deepen its focus on Web3 security, providing stronger, more reliable protection and compliance support through technology innovation and product iteration. Alongside industry partners like Bitget, we aim to foster the healthy and sustainable growth of the intelligent trading era.